Understanding Transformers and Attention Mechanisms

Interactive Video • Computers • 10th Grade - University • Hard

Aiden Montgomery

10 questions

1.

MULTIPLE CHOICE QUESTION

30 sec • 1 pt

What is the primary goal of a transformer model?

To classify images

To translate text from one language to another

To predict the next word in a sequence

To generate images from text

2.

MULTIPLE CHOICE QUESTION

30 sec • 1 pt

How does the attention mechanism help in understanding context?

By updating word embeddings based on surrounding words

By focusing on the most important words

By translating words into different languages

By ignoring irrelevant words

3.

MULTIPLE CHOICE QUESTION

30 sec • 1 pt

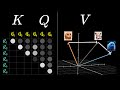

What is the role of the query matrix in the attention mechanism?

To store the original word embeddings

To map embeddings to a smaller space for context searching

To translate words into different languages

To generate new words

4.

MULTIPLE CHOICE QUESTION

30 sec • 1 pt

What is the purpose of the softmax function in the attention mechanism?

To normalize the relevance scores between words

To translate words into different languages

To increase the dimensionality of embeddings

To decrease the computational complexity

5.

MULTIPLE CHOICE QUESTION

30 sec • 1 pt

What is a key feature of multi-headed attention?

It reduces the number of parameters in the model

It focuses on a single word at a time

It runs multiple attention heads in parallel

It uses a single attention head for all computations

6.

MULTIPLE CHOICE QUESTION

30 sec • 1 pt

How many attention heads does GPT-3 use in each block?

12

24

48

96

7.

MULTIPLE CHOICE QUESTION

30 sec • 1 pt

What is the significance of the value matrix in the attention mechanism?

It stores the original word embeddings

It determines the relevance of words

It translates words into different languages

It provides the information to update embeddings
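
For reference, the pieces asked about in questions 3, 4 and 7 (query matrix, softmax, value matrix) can be tied together in a minimal single-head sketch. This is an illustration under stated assumptions, not the implementation of any particular model: the names `softmax`, `matvec`, `attention`, `W_q`, `W_k` and `W_v` are hypothetical, the key matrix `W_k` is assumed as the counterpart the queries are matched against, the value matrix is assumed to map back to the embedding size so its output can be added directly, and masking is left out.

```python
import math

def softmax(scores):
    """Normalize raw relevance scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]

def attention(embeddings, W_q, W_k, W_v):
    """Single attention head without masking (illustrative sketch).

    W_q and W_k map each embedding to a smaller query/key space;
    W_v (assumed square here) produces the information added to each embedding.
    """
    queries = [matvec(W_q, e) for e in embeddings]
    keys = [matvec(W_k, e) for e in embeddings]
    values = [matvec(W_v, e) for e in embeddings]
    d_k = len(keys[0])

    updated = []
    for emb, q in zip(embeddings, queries):
        # Relevance of every word to this word: scaled dot product of query and key.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted sum of value vectors is the update for this embedding.
        update = [sum(w * v[j] for w, v in zip(weights, values))
                  for j in range(len(emb))]
        updated.append([e + u for e, u in zip(emb, update)])
    return updated
```

Multi-headed attention (question 5) would run several such heads in parallel, each with its own matrices, and add all of their updates to the embeddings.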
